The Measurement Problem: How Digital Tools Create More Problems Than They Solve


Social media connected us across vast distances, then meticulously engineered our addiction through infinite scrolling and dopamine-triggering notifications. Productivity apps organized our tasks, then multiplied them while bombarding us with reminders. Communication tools made instantaneous connection possible, then created the expectation of perpetual availability.

Why do our digital tools so consistently solve one problem while creating three more? The answer lies not in technological limitations, but in what we choose to measure.

The Metrics We Optimize For

Throughout the tech industry, success is measured in a remarkably consistent set of metrics:

- Monthly active users: How many people use the platform regularly

- Engagement time: How many minutes (or hours) users spend on the platform

- Retention rates: How many users keep coming back

- Revenue per user: How much money each user generates

- Growth percentages: How quickly all these numbers increase

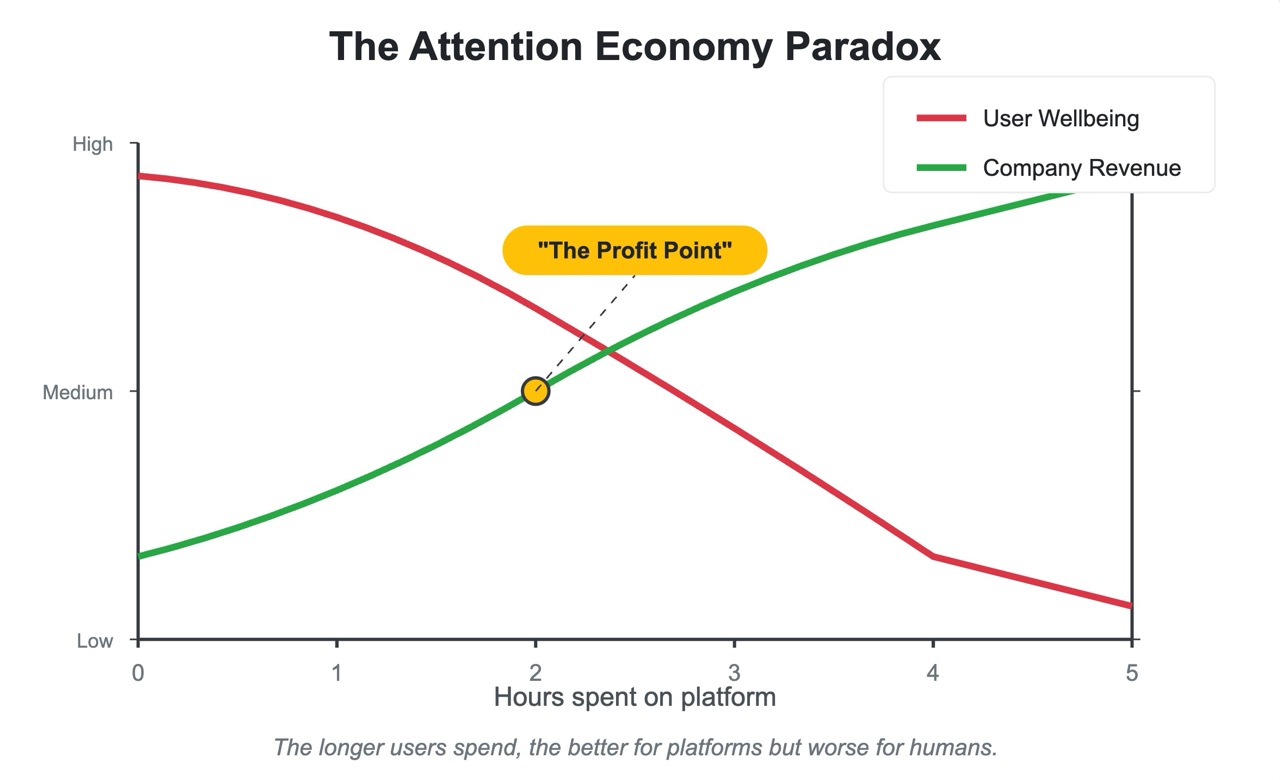

These metrics are easily quantifiable, clearly communicable to investors, and directly tied to business outcomes. They're also fundamentally disconnected from whether a platform actually improves people's lives.

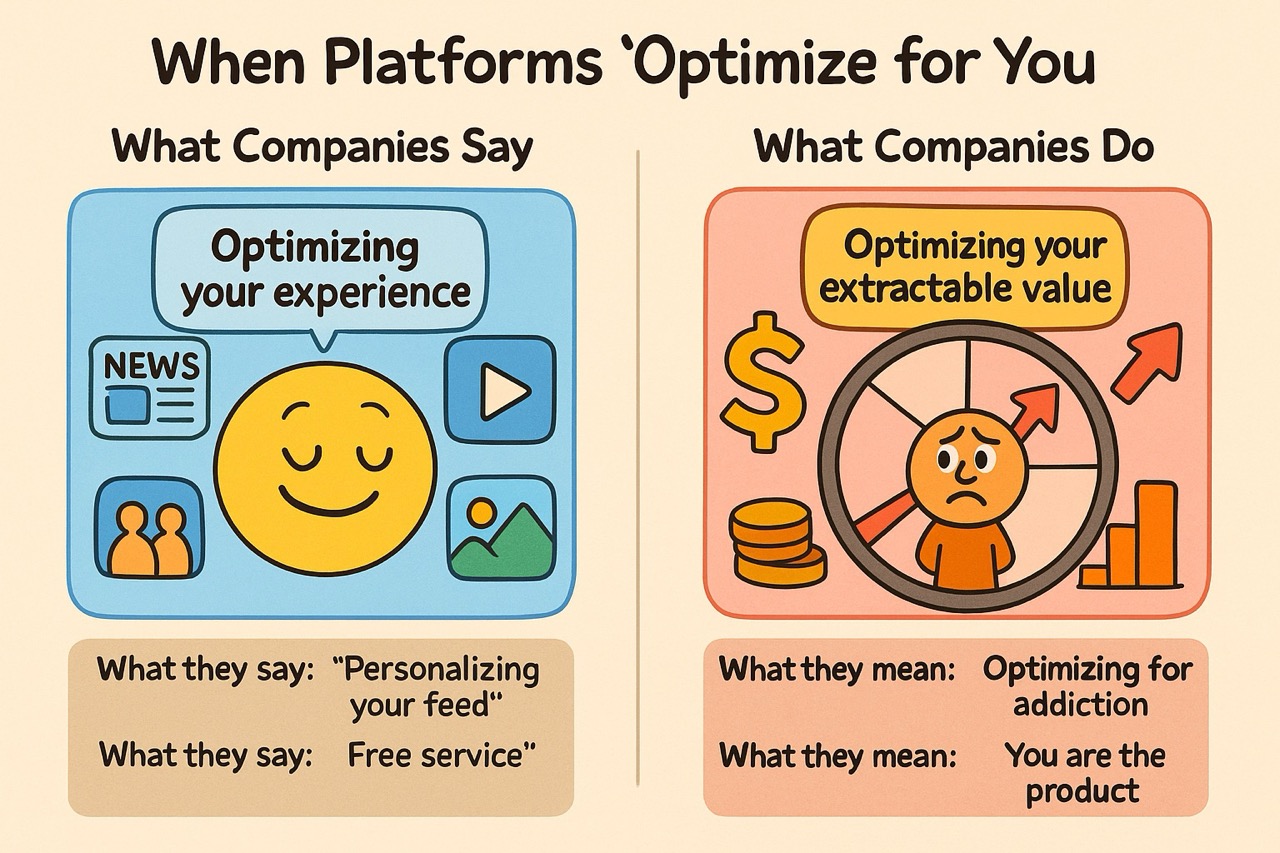

As computer scientist Cal Newport puts it: "These companies offer you shiny treats in exchange for minutes of your attention and bits of your personal data, which they then sell to the highest bidder."

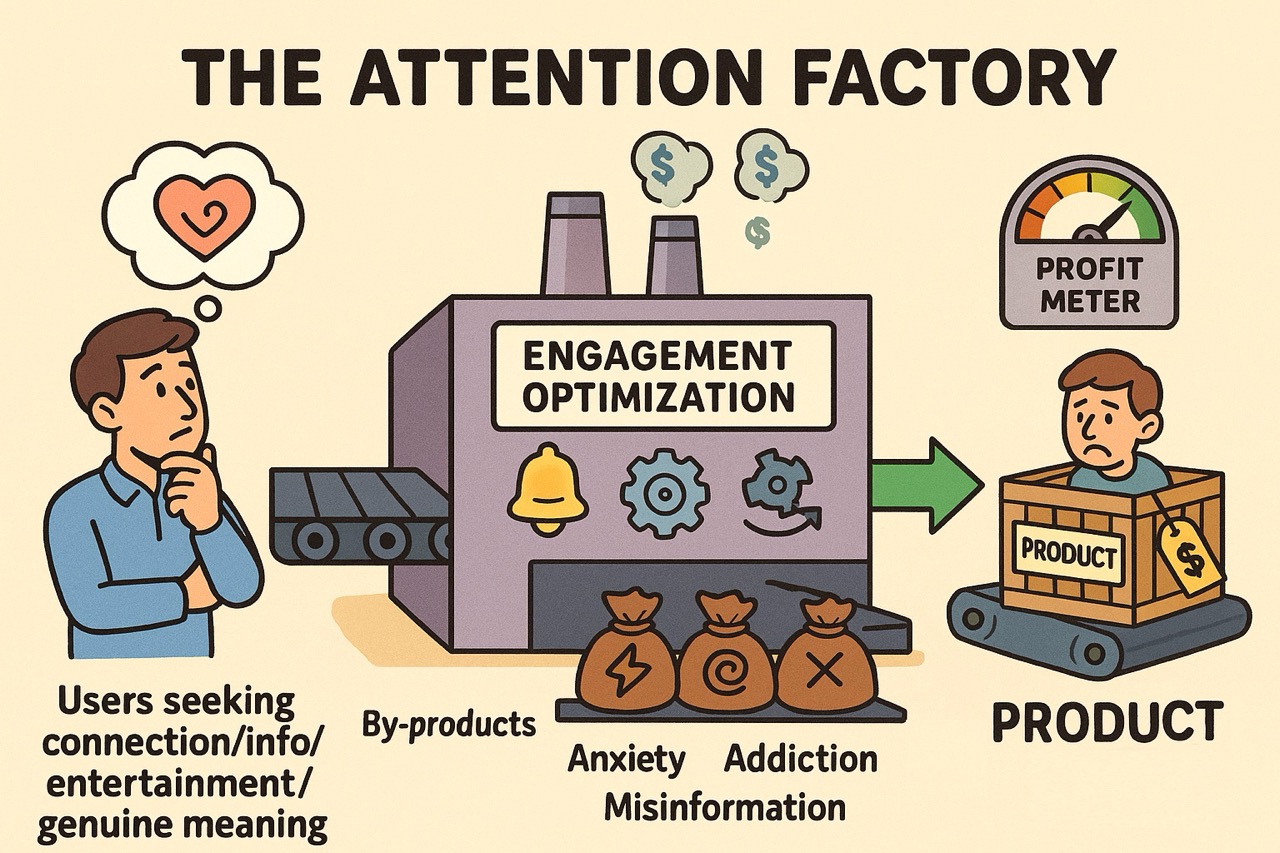

The problem isn't that tech companies are malevolent—it's that they're responding rationally to the incentives built into our economic system. When success equals engagement and growth, products will inevitably evolve to capture more attention regardless of the human cost.

The Selective Reporting of Benefits, Not Harms

Tech companies have mastered the art of selective measurement. Their annual reports, keynote presentations, and press releases meticulously document positive metrics: connections made, information shared, convenience delivered. What they systematically ignore are the psychological costs.

You'll never see a quarterly report that mentions:

- Anxiety induced: The estimated increase in user stress levels

- Attention degraded: The measured impact on users' ability to focus

- Sleep disrupted: Hours of rest lost due to nighttime scrolling

- Reality perception distorted: How platform usage shapes users' views of themselves and others

This selective focus isn't accidental; it's strategic. By measuring only the benefits while ignoring the harms, companies create a fundamentally distorted picture of the value they create. The "value exchange" (a free service in return for attention and data) looks fair only until the full balance sheet includes psychological costs.

The Psychological Impact of Attention Harvesting

The attention economy has profound consequences for mental health and cognitive functioning that largely go unmeasured:

Fragmented Attention

Platforms actively train users to consume content in ever-smaller chunks. The spread of short-form video, popularized by TikTok and quickly copied by Instagram Reels and YouTube Shorts, is a prime example. Research has linked extended social media use to difficulty sustaining attention on tasks that require deep focus. This impact isn't a side effect. It's a direct consequence of products designed to maximize engagement through constant novelty and interruption.

Engineered Anxiety

Notification systems, social comparison features, and artificial scarcity mechanics (like "limited time offers" and "disappearing content") deliberately exploit psychological vulnerabilities to drive engagement. These design patterns capitalize on FOMO (fear of missing out) and the craving for social validation to keep users checking and posting.

Addiction by Design

Features like infinite scroll, autoplay, and algorithmic content selection aren't neutral. They're carefully crafted using principles from behavioral psychology to foster compulsive use. The variable rewards in social feeds, where each refresh may or may not surface something gratifying, mirror the variable-ratio reinforcement that makes slot machines so hard to walk away from. These aren't accidents or unfortunate side effects; they're the product of deliberate optimization for engagement metrics.

Reality Distortion

Recommendation algorithms create filter bubbles that systematically distort users' perception of reality. By optimizing for engagement rather than accuracy or wellbeing, these systems often promote content that triggers strong emotional reactions over content that provides balanced information. This distortion has documented effects on everything from body image to political polarization.

The Absence of Meaningful Guardrails

Perhaps most telling is what platforms choose not to build. Most tech products provide virtually no meaningful guardrails against harmful usage patterns:

- After five hours of continuous scrolling, most platforms won't suggest taking a break

- Recommendation systems don't reduce the emotional intensity of content when they detect a user spiraling into negative emotional states

- Usage dashboards that might promote healthier patterns are buried deep in settings rather than prominently displayed

- Notification controls default to maximum interruption rather than minimum disruption

When platforms do introduce "digital wellbeing" features, they typically:

- Require opt-in rather than being enabled by default

- Can be dismissed with a single tap

- Are dramatically outweighed by dozens of other features designed to maximize engagement

- Focus on creating the appearance of responsibility rather than meaningfully changing usage patterns

Compare this to other industries where guardrails are standard: many jurisdictions require gambling operators to offer cooling-off and self-exclusion tools, bars are barred from serving visibly intoxicated patrons, and even children's television faces limits on advertising. Yet digital platforms, despite having unprecedented data about usage patterns and the technical ability to implement safeguards, consistently choose not to do so.

This isn't a limitation of technology but a direct consequence of incentive structures that reward maximizing use regardless of impact.
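To make the "technical ability" point concrete, here is a minimal sketch of a break reminder. It assumes a hypothetical `SessionTracker` that receives a timestamp for every scroll or tap; the 60-minute threshold and 10-minute idle gap are illustrative assumptions, not recommendations and not any real platform's settings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Illustrative thresholds (assumptions, not recommendations).
BREAK_AFTER = timedelta(minutes=60)          # continuous use before a nudge
IDLE_RESETS_SESSION = timedelta(minutes=10)  # a gap this long ends a session


@dataclass
class SessionTracker:
    """Hypothetical guardrail that decides when to suggest a break."""
    session_start: Optional[datetime] = None
    last_activity: Optional[datetime] = None
    nudged: bool = False

    def record_activity(self, now: datetime) -> bool:
        """Record one scroll/tap at `now`; return True if a break nudge is due."""
        if self.last_activity is None or now - self.last_activity >= IDLE_RESETS_SESSION:
            # First event, or the user stepped away long enough: new session.
            self.session_start = now
            self.nudged = False
        self.last_activity = now
        if not self.nudged and now - self.session_start >= BREAK_AFTER:
            self.nudged = True  # nudge once per session, not on every event
            return True
        return False


# Usage: one event per minute for 90 minutes of late-night scrolling.
tracker = SessionTracker()
start = datetime(2024, 1, 1, 22, 0)
nudges = [tracker.record_activity(start + timedelta(minutes=m)) for m in range(91)]
print(nudges.index(True))  # 60: the nudge fires once, an hour in
```

The logic is a few dozen lines. Whether something like it ships enabled by default, rather than behind an opt-in toggle, is a product decision, not an engineering constraint.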

The Metrics We Don't Measure

Consider how different our digital landscape might look if platforms were required to track and optimize for metrics like:

- Net time saved vs. consumed: Does this tool give people more time or take it away?

- Problem resolution rate: What percentage of users actually solve the problem they came with?

- Psychological wellbeing impact: Does using this tool improve or degrade mental health?

- Attention integrity: Does this tool help users maintain focus or fragment it?

- Relationship quality: Does this platform enhance or diminish meaningful human connections?

These metrics matter enormously to human flourishing, yet they remain largely unmeasured, unreported, and unoptimized.

The Social Media Example

Social media provides the clearest illustration of this measurement problem. The original promise was compelling: connect with friends, share your life, discover new ideas. The core problem being solved—human connection across distance—was meaningful and valuable.

Yet as these platforms evolved, they systematically introduced features explicitly designed to maximize engagement metrics:

- Infinite scrolls replaced pagination, removing natural stopping points

- Algorithmic feeds prioritized engagement over chronology or user choice

- Notifications were increasingly engineered to trigger dopamine release

- Content recommendation engines optimized for time-on-site rather than genuine value

- Like counts and other social metrics created powerful social feedback loops

Each of these features makes perfect sense when optimizing for engagement and growth. None makes sense when optimizing for user wellbeing or time well spent.

The result is a collection of platforms that successfully solved the connection problem while creating entirely new problems around addiction, mental health, privacy, misinformation, and attention fragmentation.

The Business Model Dilemma

At the root of this measurement problem lies a fundamental business model challenge. Most digital platforms rely on one of two revenue sources:

- Advertising: Sell user attention and data to advertisers

- Subscription/purchases: Charge users directly for value received

The advertising model inherently incentivizes maximizing attention capture and data collection. The subscription model theoretically aligns better with user interests, but still typically measures success through growth and engagement rather than problem resolution or wellbeing improvement.

What's missing is a business model that directly rewards measurable improvements in users' lives.

Reimagining Success Metrics

Imagine a world where digital platforms had to report metrics like:

- Time returned ratio: For every minute spent using our product, how many minutes did we save users?

- Problem resolution score: What percentage of user problems were successfully solved?

- Wellbeing impact factor: Measured through validated psychological instruments, how did our product affect user mental health?

- Attention respect index: How often did our product interrupt users versus respecting their focus?

- Genuine connection quotient: Did our platform facilitate meaningful human interactions?

These aren't impossible to measure. They're simply not prioritized because they don't directly drive quarterly results under current business models.
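As a rough illustration, three of these metrics can be computed from ordinary session logs. The sketch below assumes a hypothetical `Session` record; in particular, `minutes_saved` would have to come from a survey or benchmark of the user's alternative, and the attention respect index is proxied here as the share of sessions the user started on their own rather than from a notification. The wellbeing and connection metrics need validated survey instruments and are not modeled.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Session:
    """Hypothetical per-session log record (field names are assumptions)."""
    minutes_spent: float             # time spent in the product
    minutes_saved: float             # estimated time saved vs. the user's alternative
    had_goal: bool                   # did the user arrive with a concrete problem?
    goal_resolved: bool              # was that problem solved?
    opened_from_notification: bool   # did a product interruption start the session?


def time_returned_ratio(sessions: Sequence[Session]) -> float:
    """Minutes saved per minute spent (above 1.0 means net time returned)."""
    spent = sum(s.minutes_spent for s in sessions)
    saved = sum(s.minutes_saved for s in sessions)
    return saved / spent if spent else 0.0


def problem_resolution_score(sessions: Sequence[Session]) -> float:
    """Share of goal-driven sessions where the goal was resolved."""
    with_goal = [s for s in sessions if s.had_goal]
    if not with_goal:
        return 0.0
    return sum(s.goal_resolved for s in with_goal) / len(with_goal)


def attention_respect_index(sessions: Sequence[Session]) -> float:
    """Proxy: share of sessions the user started without being interrupted."""
    if not sessions:
        return 0.0
    return sum(not s.opened_from_notification for s in sessions) / len(sessions)


# Positional fields: spent, saved, had_goal, goal_resolved, opened_from_notification
sessions = [
    Session(10, 30, True, True, False),
    Session(20, 0, True, False, True),
    Session(30, 15, False, False, False),
]
print(round(time_returned_ratio(sessions), 2))       # 0.75
print(round(problem_resolution_score(sessions), 2))  # 0.5
print(round(attention_respect_index(sessions), 2))   # 0.67
```

None of this is harder than computing engagement time; the difference is which number ends up on the dashboard.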

Systemic Change vs. Individual Choice

The common response to these concerns is to put the responsibility on individual users: "Just use technology mindfully. Turn off notifications. Set time limits."

This advice, while well-intentioned, misunderstands the scale of the problem. We're not facing a crisis of individual willpower but a systemic misalignment of incentives. Asking individuals to resist systems explicitly engineered to capture their attention is like asking people to avoid gaining weight while the food supply is systematically filled with addictive, hyper-palatable foods designed by scientists to override satiety signals.

Individual discipline matters, but system design matters more.

Potential Paths Forward

Despite these challenges, several promising directions could help realign technology with human wellbeing:

1. Regulatory Frameworks

Just as food products must include nutritional information, digital products could be required to measure and report their impacts on user wellbeing. The EU's Digital Services Act represents a step in this direction, requiring greater transparency around algorithms and content moderation.

2. Investor Pressure

As ESG (Environmental, Social, Governance) metrics gain importance in investment decisions, "digital wellbeing impact" could become a standard consideration. Some venture capital firms are already prioritizing more humanistic metrics when evaluating potential investments.

3. Alternative Business Models

New models are emerging that better align company success with user wellbeing:

- Usage-based pricing that rewards efficiency rather than engagement

- Outcome-based models where payment depends on successful problem resolution

- Public benefit corporations that explicitly balance profit with social good

- Open-source, community-supported platforms that prioritize user control

4. Consumer Education and Demand

As users become more aware of how their attention and data are being monetized, demand grows for alternatives that respect their time and wellbeing. This creates market opportunities for tools designed around fundamentally different metrics.

The Next Generation of Tools

A few pioneering tools are already experimenting with alternative metrics and models:

- Productivity tools that measure success by how quickly users can complete tasks and close the app

- Social platforms that prioritize meaningful interactions over quantity of engagement

- Communication tools designed to respect attention and reduce rather than multiply notifications

- News and information services optimized for understanding rather than engagement

These remain exceptions rather than the rule, but they demonstrate that alternative approaches are possible.

Conclusion: Measuring What Matters

Technology isn't inherently problematic—it's the measurement systems and incentive structures we've built around it that drive its evolution toward addiction rather than wellbeing.

The first step toward better digital tools is recognizing that our current metrics are inadequate. We're measuring what's easy to measure and financially rewarding to optimize, not what actually matters for human flourishing.

The platforms that will truly serve humanity won't be those that maximize engagement, growth, and revenue alone. They'll be those that develop sophisticated ways to measure their actual impact on users' lives—and then continuously optimize to improve those impacts, even when doing so might reduce traditional growth metrics.

Until we solve this measurement problem, we'll continue creating tools that promise to simplify our lives while actually complicating them, promising connection while delivering isolation, and promising empowerment while inducing addiction.

The good news? This is a solvable problem. Not through better code, but through better metrics that align technology with human wellbeing. The question is whether we have the collective will to demand systems measured by their contribution to human flourishing rather than their ability to capture human attention.

What metrics would you want your favorite digital tools to optimize for? How might they change if they prioritized your wellbeing over your engagement?